Power BI Report Server has its use cases, but it falls short of the Power BI Service (app.PowerBI.com) in a few areas. One of the major gaps is that it doesn’t notify anybody when a report refresh fails. Report owners are left either reviewing the reports every time they’re scheduled to ensure the data is refreshed, creating a report that shows refresh status (and hoping it actually refreshes itself), or implementing their own homebrew notification solution.

I opted for #3 – creating a custom notification process – and I want to share it with anybody else that’s wondering how to set up notifications for their own internal Power BI Server instance. If you just want the script, jump to the end for the link and variable instructions.

The process has a few high-level parts:

- Query for failed report refreshes. Check the report catalog and refresh history to find reports where the last refresh attempt failed within the monitoring window. If a report has since refreshed successfully, or is refreshing right now, I chose not to alert on it: that means somebody is already on it, and we wanted to see reports that are currently failed and need attention, not a log of every failure.

- Create the email notification text. This is a multi-step process that inserts a header, builds a table with details on the reports, and then a footer with instructions and additional details.

- Actually send the email. Don’t forget to do this part 🙂 We’ll use DBMail to send it.

- SQL Agent job that runs the notification code. This is the recurring schedule that checks for failures. We chose to run it every 5 minutes, so that’s what I have it set up for below (it can be customized as needed for any interval).

CAVEAT BEFORE WE START: We’ll be querying the PBI Report Server database, which could change at any time and is totally unsupported. I’ve never seen any issues caused by it and I’ve never had a table/view change from under code I’ve written to do this, but you’ve been warned. However, we’re only reading – don’t make any changes or write to this database or you could break your PBIRS installation. If you want to learn more about this in general, check out this write-up.

Query for failed report refreshes

We’ll start by getting the last successful refresh for each of our reports. The process doesn’t actively use this yet, but it keeps the value so that reports that have never refreshed, or haven’t refreshed successfully for X time, can be called out in the future:

SELECT [SubscriptionID]

,max([EndTime]) as LastSuccessTime

FROM [YourPBIRSDatabase].[dbo].[SubscriptionHistory]

WHERE Status = 0 -- Success ("Completed Data Refresh")

GROUP BY SubscriptionID

The [SubscriptionHistory] table contains all the records for all scheduled refreshes, regardless of success or failure. In this case, we’re filtering for “Status = 0”, which is success – other numbers are error codes.

To grab a list of all the reports and their refresh status, we’ll use two more tables: [Catalog] (which contains a list of all the reports) and [Subscriptions] (which contains the scheduled refresh jobs). Where [SubscriptionHistory] is the log of all the job executions, [Subscriptions] contains a list of every scheduled refresh that’s set up. One of the nice things about this table is that it also contains details about the current state of the schedule – if the last run was successful or a failure, and details on the last error if there was one.

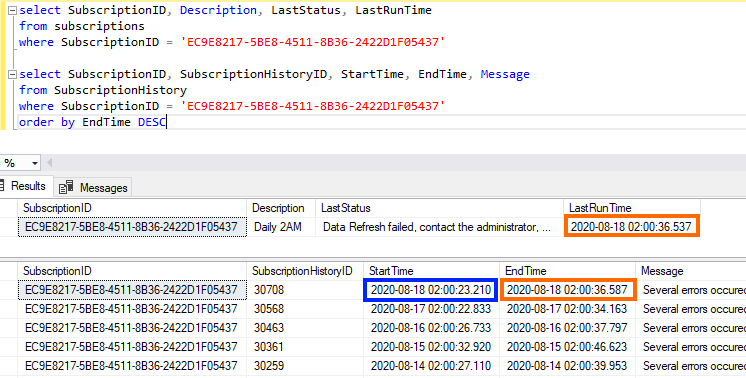

[Subscriptions] also contains a column called [LastRunTime] with a timestamp when the schedule was last run. What’s handy here is that it’s a timestamp for the last STATUS of the schedule, not the time the last refresh started. Because of that, it will contain the timestamp of the end of execution in case of a failure. Here’s how they link together (the boxes in orange match, or match close enough to show that it’s end time and not start time):

When we join those together, here’s what we get (you’ll start to see some variables here – the script has a large block at the top where you can customize the script behavior):

select LEFT(c.Path, LEN(c.Path) - LEN(c.Name)) as Path,

c.Name,

@URLRootDataRefresh + Path as DataRefreshURL,

s.Description, s.LastStatus, LastRunTime, ls.LastSuccessTime

into #workingtable

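from [dbo].[Subscriptions] s

join dbo.[Catalog] c

on s.Report_OID = c.ItemID

-- the last-success query from above, joined in so ls.LastSuccessTime resolves

-- (shown as a derived table here; a temp table works just as well)

left join (select SubscriptionID, max(EndTime) as LastSuccessTime

from dbo.SubscriptionHistory

where Status = 0

group by SubscriptionID) ls

on ls.SubscriptionID = s.SubscriptionID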

where s.LastStatus not in ('Completed Data Refresh', 'New Scheduled Refresh Plan', 'Refreshing Data')

and c.path not like @IgnorePath

and DATEDIFF(ss, LastRunTime, GETDATE()) < @ThresholdSeconds

The first filter in the WHERE clause filters out reports that are either refreshing now or last refreshed successfully. The second allows you to use a variable to exclude a path (we keep our retired reports in a hidden folder and didn’t want them triggering an alert) – this is optional. The third filter only returns reports that failed within the last X seconds – this should match how often the job runs to check for new failures so that it’s only getting failures since the last execution. A more advanced version could check to see when the SQL Agent job last ran and get failures since then, but that wasn’t needed in our case.
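For anyone who does want that “more advanced version”, here’s a rough sketch of how it might look. It is not part of the script: the job name is hypothetical, and it leans on msdb.dbo.agent_datetime, an undocumented helper function that ships in msdb, to turn the job history’s integer date and time columns into a DATETIME. If the job has no history yet, it falls back to the fixed threshold.

```sql
-- Sketch only: derive the monitoring window from the Agent job's previous run.
-- The job name is hypothetical; use whatever you named your own job.
DECLARE @JobName SYSNAME = N'PBIRS Failed Refresh Alert',
        @ThresholdSeconds INT = 300,
        @WindowStart DATETIME;

-- step_id = 0 is the job outcome row; run_status = 1 means the run succeeded.
-- run_date/run_time record when that run started.
SELECT @WindowStart = MAX(msdb.dbo.agent_datetime(h.run_date, h.run_time))
FROM msdb.dbo.sysjobs j
JOIN msdb.dbo.sysjobhistory h
  ON h.job_id = j.job_id
WHERE j.name = @JobName
  AND h.step_id = 0
  AND h.run_status = 1;

-- Fall back to the fixed threshold if the job has never completed.
SET @WindowStart = ISNULL(@WindowStart,
                          DATEADD(SECOND, -@ThresholdSeconds, GETDATE()));

SELECT @WindowStart AS WindowStart;

-- In the main query, the third filter would then become:
--   and s.LastRunTime >= @WindowStart
```

Because the window starts at the previous run’s start time, a failure that lands while that run is executing could be reported twice. For an alert, a duplicate seemed like the safer side to err on.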

There’s also a column called DataRefreshURL for each report – this will link directly to the “Scheduled Refresh” settings page for the report, and uses the report management page under (yourserver)/reports/manage/catalogitem/listcaching_pbi/(yourreport). This will allow you to go right from the email to the page that lets you view error details and refresh the report again if the error is temporary.

Create the email notification text

Now that we have a temp table (#WorkingTable) that’s holding our failed reports, let’s build an email!

I wanted to line things up neatly in columns, so the first step was figuring out how wide each column should be:

SET @LongestName = (SELECT MAX(LEN(Name)) FROM #workingtable)

SET @LongestPath = (SELECT MAX(LEN(path)) FROM #workingtable)

SET @LongestLink = (SELECT MAX(LEN(DataRefreshURL)) FROM #workingtable)

With those lengths set, we can build the headers. For each of the three columns, we’ll include the column title and then enough spaces to justify each column evenly – we’ll use the same formula for the detail lines as we will for the header:

SELECT @Body = @Body + ('Report Title:' + SPACE(@LongestName - 13 + 2))

+ ('Path:' + SPACE(@LongestPath - 5 + 2))

+ ('Refresh Status Link:' + SPACE(@LongestLink - 20))

+ @CRLF

+ REPLICATE('-', @LongestName) + SPACE(2)

+ REPLICATE('-', @LongestPath) + SPACE(2)

+ REPLICATE('-', @LongestLink)

+ @CRLF

This uses both SPACE and REPLICATE to include a certain number of characters to ensure the final text is evenly column justified. There’s also an @CRLF variable that’s set at the beginning of the script – whenever I’m building multi-line text, I always create this variable as it makes breaking lines apart much, much easier further down and allows you to keep formatting consistent.
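One thing to watch with this padding approach: SPACE returns NULL when it’s handed a negative number, and concatenating NULL turns the whole string into NULL. That can happen if every report name is shorter than the “Report Title:” header, or if the table is empty. Here’s a minimal, self-contained sketch of one way to guard against it. The @Demo table variable is a stand-in for #workingtable, and the '|' characters are only there to make the column edge visible:

```sql
-- Sketch: floor the column width at the header length so SPACE() never
-- receives a negative number (which would make the whole @Body NULL).
DECLARE @Demo TABLE (Name VARCHAR(100));   -- stand-in for #workingtable
INSERT INTO @Demo (Name) VALUES ('Sales'), ('Ops KPIs');

DECLARE @CRLF CHAR(2) = CHAR(13) + CHAR(10),
        @Body VARCHAR(MAX) = '',
        @LongestName INT;

-- ISNULL covers an empty table, where MAX() returns NULL.
SELECT @LongestName = ISNULL(MAX(LEN(Name)), 0) FROM @Demo;

-- Never let the column be narrower than its own header.
IF @LongestName < LEN('Report Title:')
    SET @LongestName = LEN('Report Title:');

SET @Body = 'Report Title:'
          + SPACE(@LongestName - LEN('Report Title:') + 2) + '|' + @CRLF
          + REPLICATE('-', @LongestName) + SPACE(2) + '|';

PRINT @Body;
```

The same floor would apply to @LongestPath and @LongestLink against their own header text.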

The line items are built much the same way, though here’s another handy trick that may be new to you. In the SELECT below, every row in the result set appends to the @Body variable, so the variable ends up with one additional line for every report in our table. This is also useful beyond multi-line text: you can use it any time you want to consolidate a set of values from a table into a single variable.

SELECT @Body = @Body + Name + SPACE(@LongestName - LEN(Name) + 2)

+ Path + SPACE(@LongestPath - LEN(Path) + 2)

+ DataRefreshURL

+ @CRLF

FROM #workingtable

ORDER BY Name, Path

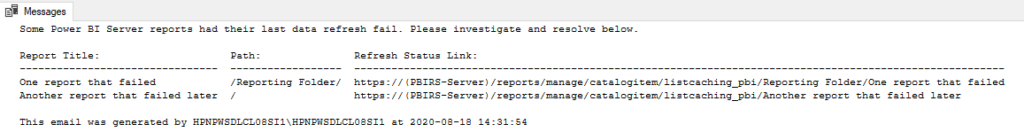

We’re also padding these values based on the “Longest” variables we set earlier – the result is something that looks like this:
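A caution on the row-by-row append trick: it’s widely used, but it isn’t documented as guaranteed behavior, particularly once an ORDER BY is involved. If you’re on SQL Server 2017 or later, STRING_AGG is a documented alternative. The sketch below is self-contained, with a table variable and made-up rows standing in for #workingtable, and shows the same detail lines built that way:

```sql
-- Sketch: build the detail lines with STRING_AGG instead of variable appends.
DECLARE @Rows TABLE (Name VARCHAR(100), Path VARCHAR(200), DataRefreshURL VARCHAR(500));
INSERT INTO @Rows (Name, Path, DataRefreshURL) VALUES
    ('Sales Summary', '/Finance/',    'https://(yourpbiserver)/reports/Finance/Sales Summary'),
    ('Ops KPIs',      '/Operations/', 'https://(yourpbiserver)/reports/Operations/Ops KPIs');

DECLARE @CRLF CHAR(2) = CHAR(13) + CHAR(10),
        @Body VARCHAR(MAX) = '',
        @LongestName INT,
        @LongestPath INT;

SELECT @LongestName = MAX(LEN(Name)),
       @LongestPath = MAX(LEN(Path))
FROM @Rows;

-- The CAST to VARCHAR(MAX) lets the aggregate grow past 8000 bytes.
-- WITHIN GROUP makes the row order explicit; ISNULL covers an empty table.
SELECT @Body = @Body + ISNULL(
           STRING_AGG(
               CAST(Name + SPACE(@LongestName - LEN(Name) + 2)
                  + Path + SPACE(@LongestPath - LEN(Path) + 2)
                  + DataRefreshURL AS VARCHAR(MAX)),
               @CRLF) WITHIN GROUP (ORDER BY Name, Path),
           '') + @CRLF
FROM @Rows;

PRINT @Body;
```

STRING_AGG only puts the separator between rows, so the trailing @CRLF is added once at the end.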

At the end, I encourage you to add a line that includes the server that’s sending the email and, if applicable, a link to any documentation – I’ve gotten into a situation before where we were getting an alert every few minutes that was being generated by some T-SQL code somewhere and we had to go through every job on every one of dozens of servers to find it. We eventually resolved the cause, but not before we’d gotten over 1000 emails each about how it was broken! Here’s something simple:

SET @Body = @Body + 'This email was generated by ' + @@SERVERNAME + ' at ' + CONVERT(VARCHAR, GETDATE(), 120)

Actually send the email

This part is simple and uses the sp_send_dbmail system stored procedure. It’s pretty straightforward – everything you need is defined in a variable earlier in the script, so you just need to call the SP:

EXEC msdb.dbo.sp_send_dbmail

@profile_name = @DBMailProfile,

@recipients = @ToAddresses,

@copy_recipients = @CopyAddresses,

@Blind_copy_recipients = @Blind_copy_recipients,

@subject = @Subject,

@body = @Body

Since you’ll be running this code from a SQL Agent job, make sure that the account you’re using for SQL Agent (or the proxy account, if you’ve configured one) has permission to execute sp_send_dbmail. From the Microsoft docs about sp_send_dbmail:

Execute permissions for sp_send_dbmail default to all members of the DatabaseMailUser database role in the msdb database.

If you don’t have those permissions, you’ll get a moderately cryptic error and your mail won’t send.
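The script also has a @SendEmail switch (described with the other variables further down) that prints the body instead of sending it. As a rough illustration of how that kind of gate can wrap the call, here’s a self-contained sketch. It is not necessarily how the full script does it, and @FailedCount is a hypothetical stand-in for a row count from #workingtable:

```sql
-- Sketch: only send when there is something to report, and print instead of
-- sending while testing. The values below are placeholders.
DECLARE @SendEmail     BIT           = 0,
        @DBMailProfile VARCHAR(500)  = 'Your DBMail Profile',
        @ToAddresses   VARCHAR(1000) = 'You@SomeDomain.com',
        @Subject       VARCHAR(1000) = 'Failed Power BI Refresh Alert',
        @Body          VARCHAR(MAX)  = 'Example body text',
        @FailedCount   INT           = 1;  -- hypothetical; e.g. SELECT COUNT(*) FROM #workingtable

IF @FailedCount > 0
BEGIN
    IF @SendEmail = 1
        EXEC msdb.dbo.sp_send_dbmail
             @profile_name = @DBMailProfile,
             @recipients   = @ToAddresses,
             @subject      = @Subject,
             @body         = @Body;
    ELSE
        PRINT @Body;  -- test mode: show what would have been sent
END
```

With @SendEmail left at 0 this runs without Database Mail configured at all, which makes it easy to check the formatting first.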

SQL Agent job that runs the notification code

Once your script is set up, you need to schedule the job – to do that, follow any walk-through on creating a SQL Agent job – I like the one here: https://www.c-sharpcorner.com/UploadFile/raj1979/create-and-schedule-a-job-in-sql-server-2008/

For the “Steps Tab” from that walk-through, you’ll want to paste the script you’ve set up (once you update all the variables to match your environment). For the “Schedules Tab” step, set the job to run every X seconds, where X is the same number of seconds you set in your variables (the default in the script is 300 seconds, or 5 minutes). The notification/alerts are optional, but you can set those up if you like.
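If you’d rather script the job than click through the UI, the msdb stored procedures can create the same thing. This is a sketch under a few assumptions: the job, step, and schedule names are made up, the command is a placeholder where the notification script would go, and the schedule values match the 5-minute default.

```sql
-- Sketch: create the Agent job in T-SQL instead of the SSMS dialogs.
DECLARE @JobName SYSNAME = N'PBIRS Failed Refresh Alert';  -- hypothetical name

EXEC msdb.dbo.sp_add_job
     @job_name = @JobName;

EXEC msdb.dbo.sp_add_jobstep
     @job_name      = @JobName,
     @step_name     = N'Check for failed refreshes',
     @subsystem     = N'TSQL',
     @database_name = N'YourPBIRSDatabase',
     @command       = N'/* paste the notification script here */';

EXEC msdb.dbo.sp_add_jobschedule
     @job_name             = @JobName,
     @name                 = N'Every 5 minutes',
     @freq_type            = 4,   -- daily
     @freq_interval        = 1,   -- every 1 day
     @freq_subday_type     = 4,   -- sub-day unit: minutes
     @freq_subday_interval = 5;   -- every 5 minutes

-- Target the local server so the job actually runs here.
EXEC msdb.dbo.sp_add_jobserver
     @job_name    = @JobName,
     @server_name = N'(local)';
```

If you change @ThresholdSeconds, change @freq_subday_interval to match.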

Cool – so what do I need to do?

To use the script, the minimum you need to do is set the variables at the beginning to match your environment and then schedule the agent job. Here’s the default variable block from the script:

DECLARE @ThresholdSeconds INT = 300,

@ToAddresses VARCHAR(1000) = 'You@SomeDomain.com',

@CopyAddresses VARCHAR(1000) = NULL,

@Blind_copy_recipients VARCHAR(1000) = NULL,

@URLRootDataRefresh VARCHAR(1000) = 'https://(yourpbiserver)/reports',

@IgnorePath VARCHAR(1000) = '/IgnoreThisFolder/%',

@DBMailProfile VARCHAR(500) = 'Your DBMail Profile',

@Subject VARCHAR(1000) = 'Failed Power BI Refresh Alert',

@SendEmail BIT = 0

Here’s what these are used for and when you should make changes:

- @ThresholdSeconds. This is how far back you want to check for report failures. This setting should match how often you run the SQL Agent job – if the values don’t match, then you run the risk of missing some failed reports.

- @ToAddresses. A list of people you want to email who should probably do something about this, separated by semi-colons.

- @CopyAddresses. Like To, but more of a heads up – leave this NULL if not applicable.

- @Blind_copy_recipients. We include this so we can send an email to our team’s Slack channel using email integration, but we don’t want this email address to get out to anybody that gets copied. Normally NULL, but some may have a special need like this.

- @URLRootDataRefresh. This should be the root of your Power BI Server address, including the “/reports” at the end. If you visit this URL, you should be redirected to the root of your reporting site to browse reports. This URL is appended with the location of the subscription settings so you can browse directly to the details of a failure.

- @IgnorePath. We had a folder where we store offline reports – failures here should be ignored. If you have multiple folders like this, you can modify this variable to add them or you can modify the query in the script to exclude these folders explicitly.

- @DBMailProfile. The name of the profile for sending mail. If you haven’t set up DB Mail, refer to the documentation to get this set up and name your profile. Here’s another good walk-through.

- @Subject. Make this sufficiently scary so that recipients pay attention!

- @SendEmail. This is set up so you can test, and it’s defaulted to 0 (meaning the script will print your mail body rather than sending it). Once you’re happy with your settings, change this to 1 so the mail actually gets sent.
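For the multiple-folder case mentioned under @IgnorePath, one option is to keep the patterns in a small table and exclude anything that matches any of them. This is a sketch, not something the script does today. The table variables and sample rows are made up so the example runs on its own, with @Catalog standing in for dbo.[Catalog]:

```sql
-- Sketch: exclude several folders by matching against a list of LIKE patterns.
DECLARE @IgnorePaths TABLE (Pattern VARCHAR(1000));
INSERT INTO @IgnorePaths (Pattern) VALUES
    ('/IgnoreThisFolder/%'),
    ('/Archive/%');

DECLARE @Catalog TABLE (Path VARCHAR(425), Name VARCHAR(425));  -- stand-in for dbo.[Catalog]
INSERT INTO @Catalog (Path, Name) VALUES
    ('/Finance/Sales Summary',    'Sales Summary'),
    ('/Archive/Old Sales',        'Old Sales'),
    ('/IgnoreThisFolder/Retired', 'Retired');

-- Keep only reports whose path matches none of the ignore patterns.
SELECT c.Path, c.Name
FROM @Catalog c
WHERE NOT EXISTS (SELECT 1
                  FROM @IgnorePaths i
                  WHERE c.Path LIKE i.Pattern);
```

In the real query, the NOT EXISTS would replace the “c.path not like @IgnorePath” filter. A side benefit is that an empty pattern list simply excludes nothing.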

Download the full script for yourself

Feedback/Suggestions?

Feel free to add a comment below – I’ll update this code as people make suggestions or it improves through my own experience using it, and I hope it helps some others who miss this functionality in Power BI Server. Share below if you end up using it – I’d love to hear about it!